Research Topics

Software systems are fundamental to modern society. With most companies organizing their economic activities around these systems, system quality has become a defining factor in corporate success. The Software Design and Analysis Laboratory develops software technologies that enable secure and reliable systems. Our work targets the building blocks of modern systems: source code, test code, networks, and infrastructure. We aim to make design, development, quality management, and operations more efficient. Below, we outline our laboratory’s research in three major areas.

AI accelerating software development

Software development continues to accelerate year by year, with AI-powered automation of development processes at its core. For example, tools like GitHub Copilot use LLMs (Large Language Models) to handle much of the programming work, bringing us closer to a world where AI takes over a significant portion of coding tasks. We aim to accelerate software development further by leveraging artificial intelligence and big data analysis beyond programming itself, automating tasks that developers have traditionally performed in the other stages of development as well.

Software development automation achieving NoOps (No Operations)

To deliver high-quality software to users in shorter timeframes, modern software development projects employ DevOps practices that automate testing and releases. For instance, continuous integration, which automates compilation and testing with every software change, is one such practice. Our lab aims to advance DevOps further, automating a broader range of operations including bug detection and correction, operational monitoring, and more, ultimately achieving NoOps where human intervention becomes entirely unnecessary.

Software analytics pursuing the ideal state of source code and projects

Modern software development leverages various systems and tools such as Git, pull requests, and bug tracking systems to improve efficiency. These systems accumulate development data, making it possible to analyze when, by whom, and what kind of development took place. In addition, over 128 million repositories are publicly available on GitHub, all of which can be analyzed. The field known as software analytics analyzes this vast body of public data, along with data from partner companies, to help individuals and teams in software development make better decisions. Our lab likewise extracts data on feature implementation, refactoring, testing, and more from development repositories, and analyzes it to understand what problems developers face and how those problems should be resolved.
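As a minimal sketch of the "when, by whom, and what" analysis described above, the snippet below parses the output of `git log --pretty=format:%h|%an|%ad|%s --date=short` and counts commits per author and per month. The pipe-separated field layout and the function name `summarize_commits` are illustrative assumptions, not the lab's actual tooling.

```python
from collections import Counter

def summarize_commits(log_text: str) -> tuple[Counter, Counter]:
    """Count commits per author and per month from `git log` output.

    Assumes each line has the form "<hash>|<author>|<YYYY-MM-DD>|<subject>",
    as produced by: git log --pretty=format:%h|%an|%ad|%s --date=short
    """
    by_author: Counter = Counter()
    by_month: Counter = Counter()
    for line in log_text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        # maxsplit=3 keeps any '|' inside the commit subject intact
        _hash, author, date, _subject = line.split("|", 3)
        by_author[author] += 1
        by_month[date[:7]] += 1  # "YYYY-MM"
    return by_author, by_month

sample = """a1b2c3d|Alice|2024-05-02|Add login form
e4f5a6b|Bob|2024-05-20|Fix null check | regression
c7d8e9f|Alice|2024-06-01|Refactor session handling"""

authors, months = summarize_commits(sample)
print(authors.most_common())   # [('Alice', 2), ('Bob', 1)]
print(sorted(months.items()))  # [('2024-05', 2), ('2024-06', 1)]
```

On a real repository, the input could come from `subprocess.run(["git", "log", "--pretty=format:%h|%an|%ad|%s", "--date=short"], capture_output=True, text=True).stdout`.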

Research Projects

Individual research projects are shown below.

Design & Analysis of Software Process

Recently, as software systems grow in scale and complexity, developing a large system can involve thousands of developers and dozens of companies. In this research group, we aim to mitigate this complexity and explore solutions that help developers achieve a smoother development process. Our current research topics include: A Planning Support System for Quantitative Management of Software Development. Process improvement in software development refers to discovering problems in the development process and providing corresponding solutions.

Software Repository Mining

Treasure digging in software development history: Repository Mining. In this research, we aim to support software comprehension. By analyzing and organizing software development history, we propose methods for presenting the relevant data. In particular, we focus on software repositories, our major data source. A software repository is development infrastructure that allows developers to collaborate with one another on software over a network. The term collectively covers the version control systems that manage source code as well as the mailing list management systems that keep track of discussions among developers.
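One common use of version control history in repository mining is co-change analysis: files that are frequently modified in the same commit are likely to be logically coupled. The sketch below counts co-changed file pairs from a list of commits, each given as the set of files it touched. The input format and the `min_support` threshold are assumptions for illustration, not a description of this group's method.

```python
from collections import Counter
from itertools import combinations

def co_change_pairs(commits: list[set[str]], min_support: int = 2) -> list[tuple[tuple[str, str], int]]:
    """Return file pairs changed together in at least `min_support` commits."""
    counts: Counter = Counter()
    for files in commits:
        # sorting ensures each unordered pair is counted under a single key
        for pair in combinations(sorted(files), 2):
            counts[pair] += 1
    return [(pair, n) for pair, n in counts.most_common() if n >= min_support]

history = [
    {"auth.py", "session.py", "README.md"},
    {"auth.py", "session.py"},
    {"db.py", "auth.py"},
    {"session.py", "auth.py", "tests/test_auth.py"},
]
print(co_change_pairs(history))  # [(('auth.py', 'session.py'), 3)]
```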

Software Analytics

What is Software Analytics? Software analytics aims to help individuals and teams make better decisions in software development. To this end, software-related data such as source code and development history is analyzed, and the results are presented in a form that can be shared among individuals and teams. Reference work: Tim Menzies and Thomas Zimmermann. "Software Analytics: So What?" IEEE Software, Volume 30, Issue 4, July 2013, pages 31-37.

Software for Cloud Computing

In cloud computing, a rapidly emerging technology, dynamically and automatically constructing and allocating computing environments by virtualizing computing resources in software has been attracting attention. In our research, we aim to control computing resources using software techniques. Specifically, our main focus is Software Defined Networking (SDN), a software-based network control technique. Building on SDN, we aim to extend and advance software techniques that support cloud computing-related technologies such as cloud gaming, big data analysis, machine learning, and IoT.
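In SDN, a controller programs switches with flow entries, each consisting of match fields, a priority, and actions; a switch applies the actions of the highest-priority entry whose fields all match a packet. The simplified model below illustrates that lookup. It is not an OpenFlow implementation: the `FlowEntry` class, the field names, and modeling a table miss as "send to controller" (as a table-miss entry is commonly configured to do) are assumptions for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class FlowEntry:
    priority: int
    match: dict[str, object]  # e.g. {"ip_dst": "10.0.0.2", "tcp_dst": 80}
    actions: list[str] = field(default_factory=list)

def lookup(table: list[FlowEntry], packet: dict[str, object]) -> list[str]:
    """Return the actions of the highest-priority entry matching `packet`.

    Fields absent from an entry's match act as wildcards.
    A miss is modeled as forwarding the packet to the controller.
    """
    candidates = [
        e for e in table
        if all(packet.get(k) == v for k, v in e.match.items())
    ]
    if not candidates:
        return ["CONTROLLER"]
    return max(candidates, key=lambda e: e.priority).actions

table = [
    FlowEntry(10, {"ip_dst": "10.0.0.2"}, ["output:2"]),
    FlowEntry(20, {"ip_dst": "10.0.0.2", "tcp_dst": 80}, ["set_queue:1", "output:3"]),
]
print(lookup(table, {"ip_dst": "10.0.0.2", "tcp_dst": 80}))  # ['set_queue:1', 'output:3']
print(lookup(table, {"ip_dst": "10.0.0.2", "tcp_dst": 22}))  # ['output:2']
print(lookup(table, {"ip_dst": "10.0.0.9"}))                 # ['CONTROLLER']
```

Because the controller can rewrite these entries at any time, the same switch hardware can be repurposed for different traffic policies purely in software.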

Software for High Performance Computing

Overview: High-performance computing (HPC) systems (i.e., supercomputers) provide massive computing performance to solve diverse problems in science and engineering. At our laboratory, we research and develop software to fully utilize the performance of state-of-the-art HPC systems. We develop massively parallel scientific applications in close collaboration with domain scientists. Furthermore, we design and implement middleware for large-scale HPC systems. Research topics: Dynamic Interconnect Control using SDN. Modern HPC systems are based on the cluster architecture, where multiple computers are connected through a high-performance network (i.

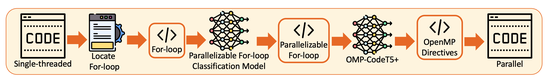

A Study on Automatic Parallelization with OpenMP using Large Language Model

To fully utilize multi-core processors, the development of parallel programs is essential. However, creating parallel programs is a challenging and complex task. Automatic parallelization techniques have been extensively studied to simplify this process by transforming sequential code into parallel code automatically. Most existing automatic parallelization tools rely on static analysis, which can identify certain types of parallel structures but fails to detect others, resulting in suboptimal performance gains. Meanwhile, the recent emergence of Large Language Models (LLMs) in Natural Language Processing (NLP) has inspired software engineering researchers to adopt these models.
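To illustrate why static analysis recognizes some parallel structures and misses others, consider the basic test it applies: a loop can safely be marked `#pragma omp parallel for` only if no iteration writes an array element that a different iteration reads or writes. The sketch below applies that test to accesses of the form `a[i + c]` with constant offsets `c`. The `Access` representation is a hypothetical simplification; real tools, and the LLM-based approach studied here, must handle far more general code (indirect indices, pointer aliasing) where this simple test cannot decide.

```python
from typing import NamedTuple

class Access(NamedTuple):
    array: str
    offset: int      # the access is array[i + offset]
    is_write: bool

def has_loop_carried_dependence(accesses: list[Access]) -> bool:
    """True if some iteration writes an element touched by a different iteration.

    Iterations i and j touch the same element when i + c1 == j + c2, i.e.
    j - i == c1 - c2, so differing offsets on the same array imply a
    cross-iteration conflict (conservatively assuming a long enough loop).
    """
    writes = [a for a in accesses if a.is_write]
    for w in writes:
        for other in accesses:
            if other.array == w.array and other.offset != w.offset:
                return True
    return False

# for (i = 0; i < n; i++) b[i] = a[i] * 2;      -> safe to parallelize
independent = [Access("a", 0, False), Access("b", 0, True)]
# for (i = 1; i < n; i++) a[i] = a[i - 1] + 1;  -> iteration i needs iteration i - 1
recurrence = [Access("a", -1, False), Access("a", 0, True)]

print(has_loop_carried_dependence(independent))  # False
print(has_loop_carried_dependence(recurrence))   # True
```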

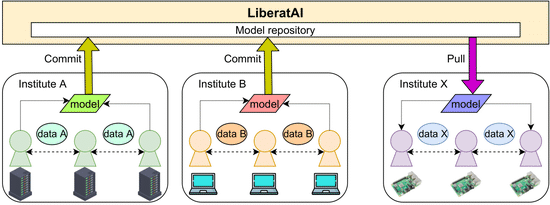

Federated Infrastructure for Collaborative Machine Learning on Heterogeneous Environments

Federated learning is a technique for training machine learning models while keeping data private. The data is kept on the devices or servers where it was collected, and only updates to the model are shared between the parties involved in the training process. This approach is effective in addressing privacy concerns and allows for a diverse dataset, with data coming from multiple sources. However, resource constraints on edge devices make it difficult for all user devices to use the same model, which could impact the model’s accuracy.
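Sharing model updates instead of raw data is commonly realized with Federated Averaging (FedAvg), in which the server averages the clients' model parameters, weighted by each client's number of local training samples. The pure-Python sketch below shows only that aggregation step on flat parameter vectors. It assumes every client uses the same model structure, which is exactly the assumption that resource-constrained edge devices make hard to satisfy, and it is not the infrastructure developed in this project.

```python
def fed_avg(client_weights: list[list[float]], num_samples: list[int]) -> list[float]:
    """Average client parameter vectors, weighting each client by its sample count."""
    if not client_weights or len(client_weights) != len(num_samples):
        raise ValueError("need one sample count per client")
    dim = len(client_weights[0])
    if any(len(w) != dim for w in client_weights):
        raise ValueError("all clients must share the same model structure")
    total = sum(num_samples)
    global_weights = [0.0] * dim
    for weights, n in zip(client_weights, num_samples):
        for k, value in enumerate(weights):
            global_weights[k] += value * n / total
    return global_weights

# Two clients; the second holds three times as much data as the first.
print(fed_avg([[1.0, 0.0], [5.0, 4.0]], [100, 300]))  # [4.0, 3.0]
```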

Comparative performance study of lightweight hypervisors used in container environment

Virtual Machines (VMs) are used extensively in the cloud. The underlying hypervisors allow hardware resources to be split into multiple virtual units, which enables server consolidation, fault containment, and resource management. However, VMs with a traditional architecture introduce heavy overhead and reduce application performance. Containers are becoming a popular option for running applications, yet they raise security concerns because their isolation is weaker than that of VMs. Containers and traditional virtualization are now converging: lightweight hypervisors are being implemented and integrated into the container ecosystem to combine the isolation of VMs with the performance of containers.

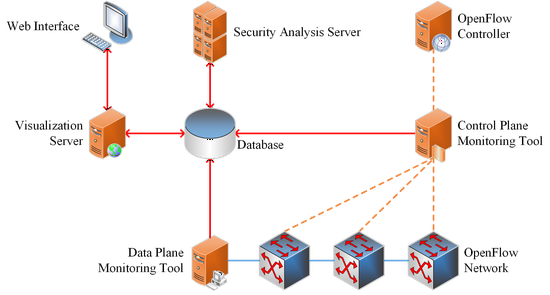

An Interactive Monitoring Tool for OpenFlow Networks (Opimon)

Software Defined Networking (SDN) is an approach to networking that realizes network programmability and dynamic control over the network. OpenFlow is a de facto standard protocol used to implement SDN. However, understanding the dynamic behavior of an OpenFlow network is challenging because information about its operation is distributed across numerous network switches. Opimon (OpenFlow Interactive Monitoring) was developed for monitoring and visualizing the network topology and flow
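OpenFlow switches report cumulative per-flow counters, such as byte counts, in their flow statistics, so a monitoring tool must poll the switches periodically and derive rates from successive samples. The sketch below shows that derivation. The `(switch_id, flow_id)` keys and the snapshot dictionaries are hypothetical stand-ins for statistics replies collected through a controller, not Opimon's actual data model.

```python
def flow_throughput_bps(prev: dict[tuple[str, str], int],
                        curr: dict[tuple[str, str], int],
                        interval_s: float) -> dict[tuple[str, str], float]:
    """Per-flow throughput in bits/s from two byte-count snapshots.

    Keys are (switch_id, flow_id). Flows missing from `prev` are counted from
    zero, and a counter that went backwards (e.g. the entry was reinstalled)
    is reported as 0.
    """
    if interval_s <= 0:
        raise ValueError("interval must be positive")
    rates = {}
    for key, byte_count in curr.items():
        delta = byte_count - prev.get(key, 0)
        rates[key] = max(delta, 0) * 8 / interval_s
    return rates

t0 = {("s1", "h1->h2"): 1_000_000, ("s2", "h1->h2"): 990_000}
t1 = {("s1", "h1->h2"): 2_250_000, ("s2", "h1->h2"): 2_240_000}
print(flow_throughput_bps(t0, t1, 5.0))
# {('s1', 'h1->h2'): 2000000.0, ('s2', 'h1->h2'): 2000000.0}
```

Comparing the same flow's rate across switches along its path is one way such a tool can reveal where traffic is dropped or diverted.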

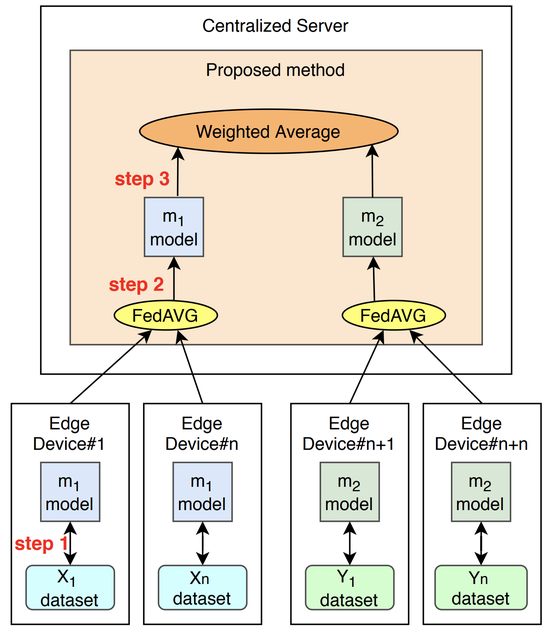

Ensembling Heterogeneous Models for Federated Learning

Federated learning trains a model on a centralized server using datasets distributed over a large number of edge devices. Applying federated learning ensures data privacy because it does not transfer local data from edge devices to the server. Existing federated learning algorithms assume that all deployed models share the same structure. However, it is often infeasible to distribute the same model to every edge device because of hardware limitations such as computing performance and storage space.
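One way to combine models whose structures differ is to ensemble their outputs rather than average their parameters: each model only needs to produce class probabilities over the same label set. The sketch below averages softmax outputs with optional weights. Treating models as plain callables and the toy models themselves are assumptions for illustration, not the algorithm proposed in this project.

```python
import math
from typing import Callable, List, Optional, Sequence

# A "model" here is any callable mapping an input vector to raw class logits.
Model = Callable[[Sequence[float]], List[float]]

def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def ensemble_predict(models: List[Model], x: Sequence[float],
                     weights: Optional[List[float]] = None) -> int:
    """Weighted average of the models' class probabilities; returns the top class."""
    weights = weights or [1.0] * len(models)
    norm = sum(weights)
    probs: Optional[List[float]] = None
    for model, w in zip(models, weights):
        p = softmax(model(x))
        if probs is None:
            probs = [0.0] * len(p)
        for k, pk in enumerate(p):
            probs[k] += w * pk / norm
    if probs is None:
        raise ValueError("need at least one model")
    return max(range(len(probs)), key=probs.__getitem__)

# Two toy models with different architectures but the same three classes.
def small_model(x):   # a single linear layer
    return [x[0], x[1], 0.0]

def larger_model(x):  # two layers with a ReLU in between
    h = [max(0.0, x[0] - x[1]), max(0.0, x[1])]
    return [h[0], 2.0 * h[1], 0.5]

print(ensemble_predict([small_model, larger_model], [0.2, 1.0]))  # 1
```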

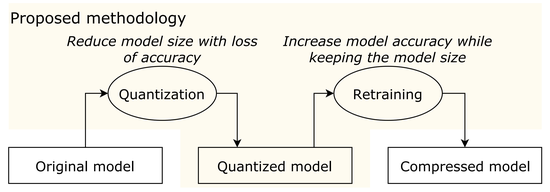

Retraining Quantized Neural Networks without Labeled Data

Running neural network models on edge devices is attracting much attention from researchers as edge computing technology becomes more powerful than ever. However, deploying large neural network models on edge devices is challenging due to limited computing resources and storage space. Therefore, model compression techniques have recently been studied to reduce model size and fit models onto resource-limited edge devices. Compressing a neural network model reduces its size, but also degrades its accuracy because it reduces the precision of the model's weights.
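The precision loss described above can be seen with uniform (affine) 8-bit quantization, a common compression scheme: each float weight is mapped to an integer in [-128, 127] via a scale and a zero point, and dequantizing recovers only an approximation. This is a generic illustration under the assumption of per-tensor affine quantization; it does not implement the label-free retraining this project studies.

```python
def quantize(weights: list[float], num_bits: int = 8) -> tuple[list[int], float, int]:
    """Affine quantization of float weights to signed `num_bits` integers."""
    qmin, qmax = -(2 ** (num_bits - 1)), 2 ** (num_bits - 1) - 1
    lo, hi = min(min(weights), 0.0), max(max(weights), 0.0)  # keep 0.0 representable
    scale = (hi - lo) / (qmax - qmin) or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(qmin - lo / scale)
    q = [max(qmin, min(qmax, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point

def dequantize(q: list[int], scale: float, zero_point: int) -> list[float]:
    return [(v - zero_point) * scale for v in q]

w = [0.013, -0.742, 0.5, 1.27, -0.001]
q, scale, zp = quantize(w)
w_hat = dequantize(q, scale, zp)
max_err = max(abs(a - b) for a, b in zip(w, w_hat))
print(q)
print(f"max abs error: {max_err:.5f} (scale = {scale:.5f})")
```

Each weight now occupies one byte instead of four, at the cost of a rounding error on the order of the scale; recovering the accuracy lost to such errors is what retraining aims to do.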

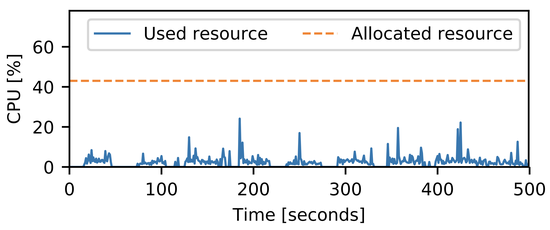

Improving Resource Utilization of Data Center using LSTM

Data centers are centralized facilities where computing and networking hardware are aggregated to handle large amounts of data and computation. In a data center, computing resources such as CPU and memory are usually managed by a resource manager. The resource manager accepts resource requests from users and allocates resources to their applications. A commonly known problem in resource management is that users often request more resources than their applications actually use.
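Whatever predictor is used (the project title names an LSTM), its usage forecast has to be turned into a resource decision. The sketch below takes a forecast as a plain list, standing in for a predictor's output, and computes how much of a job's request could be reclaimed. The 10% safety margin and the "keep the forecast peak" rule are arbitrary assumptions for illustration, not the project's policy.

```python
def reclaimable(requested: float, forecast: list[float], margin: float = 0.10) -> float:
    """Capacity that could be reclaimed from a job, given a usage forecast.

    The job keeps its forecast peak plus a safety margin; anything above that,
    up to the original request, is considered reclaimable.
    """
    if not forecast:
        return 0.0  # no forecast: reclaim nothing
    needed = max(forecast) * (1.0 + margin)
    return max(requested - needed, 0.0)

# A job requested 8 CPU cores but is forecast to use at most 3 cores.
print(reclaimable(8.0, [2.1, 2.8, 3.0, 2.5]))  # approximately 4.7
```

The margin trades utilization against the risk of a job exceeding its shrunken allocation, which is why forecast accuracy matters.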

A Hybrid Game Contents Streaming Method to Improve Graphic Quality Delivered on Cloud Gaming

Background: In recent years, cloud gaming, also known as gaming on demand, has emerged as a gaming service that promises to provide millions of clients with novel and highly accessible gaming experiences, and it has been an active topic in both industry and research. Compared to online games, which only store game state, cloud gaming takes greater advantage of cloud infrastructure by leveraging reliable, elastic, and high-performance computing resources.

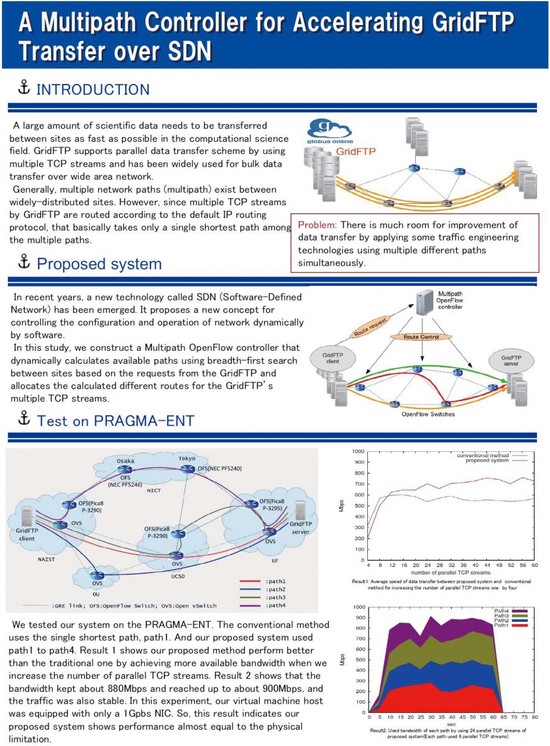

A Multipath Controller for Accelerating GridFTP Transfer over SDN

In computational science, large amounts of scientific data need to be transferred from one site to another as fast as possible. High-speed data transfer between sites is especially important in Grid computing, where GridFTP has been widely used for bulk data transfer over wide area networks. GridFTP achieves higher performance by supporting parallel TCP streams. Using parallel TCP streams improves throughput during TCP slow start and over lossy networks, even on a single path.
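Parallel streams help because each TCP connection manages its own congestion window: with loss-based congestion control such as Reno, the aggregate window grows faster during slow start, and a single packet loss cuts back only one stream rather than the whole transfer. A simple way to feed several streams is to split the file into byte ranges, one per stream, as sketched below. This equal partition is a simplification; GridFTP's extended block mode (mode E) labels each block with its offset, so blocks need not follow a fixed split.

```python
def split_ranges(file_size: int, streams: int) -> list[tuple[int, int]]:
    """Split [0, file_size) into `streams` contiguous (offset, length) ranges.

    Lengths differ by at most one byte; the first `file_size % streams`
    ranges each get one extra byte.
    """
    if streams < 1:
        raise ValueError("need at least one stream")
    base, extra = divmod(file_size, streams)
    ranges, offset = [], 0
    for i in range(streams):
        length = base + (1 if i < extra else 0)
        ranges.append((offset, length))
        offset += length
    return ranges

print(split_ranges(10, 4))  # [(0, 3), (3, 3), (6, 2), (8, 2)]
```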

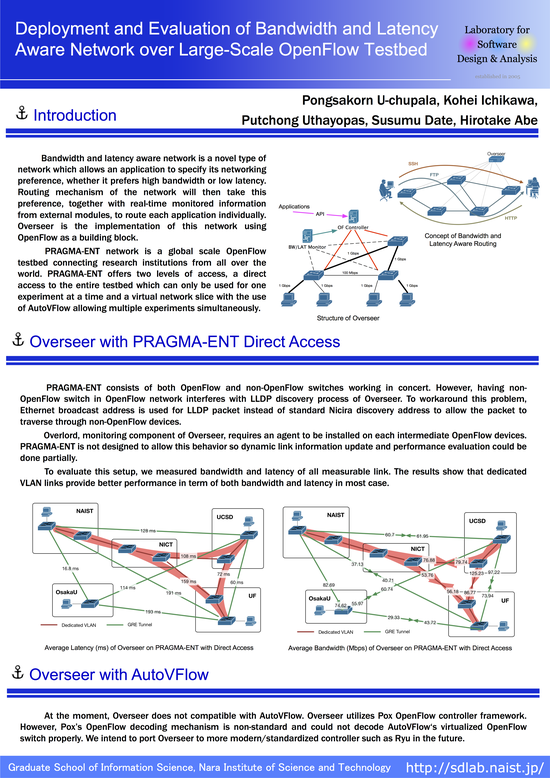

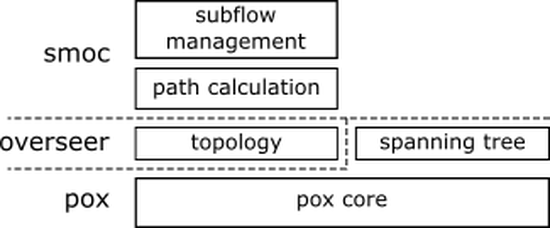

Overseer: SDN-Assisted Bandwidth and Latency Aware Route Optimization based on Application Requirement

Bandwidth and latency are the two factors that contribute most to network application performance. Between any pair of switches in a network, there may be multiple connecting paths, and each path has different properties due to multiple factors. Traditional shortest-path routing does not take this knowledge into consideration and may result in suboptimal application performance and underutilization of the network. We propose the concept of “bandwidth and latency aware routing”.
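One way to make bandwidth and latency aware routing concrete: a path's available bandwidth is the minimum over its links (the bottleneck), while its latency is the sum over its links. Given an application's bandwidth requirement, the sketch below picks, among paths that meet it, the one with the lowest latency. It enumerates simple paths by depth-first search, which is practical only on small topologies; the graph encoding and the selection rule are assumptions for illustration, not Overseer's actual algorithm.

```python
from typing import Dict, Iterator, List, Optional, Tuple

# node -> neighbor -> (available bandwidth in Mbps, latency in ms)
Graph = Dict[str, Dict[str, Tuple[float, float]]]

def add_link(g: Graph, a: str, b: str, bw: float, lat: float) -> None:
    """Add an undirected link with the same properties in both directions."""
    g.setdefault(a, {})[b] = (bw, lat)
    g.setdefault(b, {})[a] = (bw, lat)

def simple_paths(g: Graph, node: str, dst: str, path: List[str]) -> Iterator[List[str]]:
    """Depth-first enumeration of loop-free paths from `node` to `dst`."""
    if node == dst:
        yield path
        return
    for nxt in g.get(node, {}):
        if nxt not in path:
            yield from simple_paths(g, nxt, dst, path + [nxt])

def best_path(g: Graph, src: str, dst: str,
              min_bw: float) -> Optional[Tuple[List[str], float, float]]:
    """Lowest-latency path whose bottleneck bandwidth is at least `min_bw`."""
    if src == dst:
        raise ValueError("source and destination must differ")
    best = None
    for p in simple_paths(g, src, dst, [src]):
        links = [g[a][b] for a, b in zip(p, p[1:])]
        bw = min(link[0] for link in links)   # bottleneck bandwidth
        lat = sum(link[1] for link in links)  # end-to-end latency
        if bw >= min_bw and (best is None or lat < best[2]):
            best = (p, bw, lat)
    return best

g: Graph = {}
add_link(g, "s1", "s4", 100, 1)   # direct but narrow
add_link(g, "s1", "s2", 1000, 2)
add_link(g, "s2", "s4", 1000, 2)
add_link(g, "s1", "s3", 1000, 5)
add_link(g, "s3", "s4", 1000, 5)

print(best_path(g, "s1", "s4", min_bw=50))   # (['s1', 's4'], 100, 1)
print(best_path(g, "s1", "s4", min_bw=500))  # (['s1', 's2', 's4'], 1000, 4)
```

Shortest-path routing would send both flows over the narrow direct link; the bandwidth-hungry flow instead gets the wider two-hop path.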

Multipath TCP routing with OpenFlow

Multipath TCP (MPTCP) is an extension to TCP that allows multiple TCP subflows to be created from a single application socket. This is done automatically by the operating system's kernel implementation, such as the MPTCP Kernel. MPTCP has advantages over network-layer and application-layer multipathing: unlike network-layer mechanisms such as Equal-Cost Multipathing (ECMP), MPTCP is capable of independent congestion control on multiple paths and therefore works well in networks with unequal paths, which is the situation in PRAGMA-ENT.
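ECMP typically spreads traffic by hashing each packet's 5-tuple and using the result to pick one of the equal-cost next hops, so every packet of a single TCP connection follows the same path. MPTCP subflows differ in at least one 5-tuple field (for example, the source port), so they can be hashed onto different paths. The sketch below models this with SHA-256 from Python's standard library; real switches use vendor-specific hardware hash functions, so the particular path indices it produces are arbitrary.

```python
import hashlib

def ecmp_path(src_ip: str, dst_ip: str, proto: int,
              src_port: int, dst_port: int, num_paths: int) -> int:
    """Deterministically map a flow's 5-tuple to one of `num_paths` equal-cost paths."""
    key = f"{src_ip}|{dst_ip}|{proto}|{src_port}|{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:4], "big") % num_paths

# Every packet of a single TCP connection hashes to the same path...
single = {ecmp_path("10.0.0.1", "10.0.1.1", 6, 40000, 5001, 4) for _ in range(100)}
print(len(single))  # 1

# ...while MPTCP subflows with different source ports may land on different paths.
subflows = [ecmp_path("10.0.0.1", "10.0.1.1", 6, port, 5001, 4)
            for port in range(40000, 40004)]
print(subflows)
```

Because the hash ignores path capacity, ECMP alone cannot shift load away from a slower path, whereas MPTCP's congestion control can.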